Can You Use A Knn Rechargr Kit On S&b Filter

Text Classification using K Nearest Neighbors

We'll define K Nearest Neighbor algorithm for text classification with Python. KNN algorithm is used to allocate past finding the K nearest matches in training information and then using the label of closest matches to predict. Traditionally, distance such as euclidean is used to detect the closest friction match.

For Text Nomenclature, we'll use nltk library to generate synonyms and use similarity scores among texts. We'll identify the K nearest neighbors which has the highest similarity score among the training corpus.

In this example, for simplicity, we'll apply K = 1

Algorithm:

Step 1: Let'due south import the libraries get-go:

Step 2:

Nosotros implement class KNN_NLC_Classifier() with standard functions 'fit' for training and 'predict' for predicting on test data. KNN uses lazy grooming which ways all ciphering is deferred till prediction. In fit method, nosotros just assign the grooming data to form variables — xtrain and ytrain. No computation is needed.

Please note grade accepts two hyper parameters k and document_path. The parameters thou is same as traditional KNN algorithm. G denotes how many closest neighbors volition be used to make the prediction. To begin with, nosotros'll use thousand=1. The other parameter explains the type of distance to exist used betwixt two texts.

In prediction office, for every row of text data, we compare the text with every row of train information to go similarity score. So prediction algo is O(m * n) where grand = no. of rows in training data and n is no. of rows of test data for which prediction needs to be done.

As we iterate through every row of training to go similarity score, nosotros apply custom office document_similarity that accepts ii texts and returns the similarity score between them (0 & ane). The college similarity score indicates more similarity betwixt them.

Step 3: Next, we implement the document similarity function. To implement this, we use synsets for each text/document.

Nosotros convert each certificate text into synsets by office doc_to_synsets. This function returns a list of synsets of each token/word in text.

Step 4: Now, we implement the part similarity score which provides the score between two texts/documents using their synsets:

This part accepts the hyper parameter distance_type which tin be of value 'path', 'wup' or 'jcn'. Depending upon this parameter appropriate similarity method is called from nltk library.

Optional: Please note we tin can implement other ways to calculate the similarity score from nltk library every bit per snippet below. The unlike functions are based on dissimilar corpus such as brownish, genesis etc. And unlike algorithms tin be used to calculate similarity scores such as jcn, wup, res etc. Documentation for these functions can exist plant at nltk.org

Step 5: Now, nosotros can implement the doc similarity which calculates the similarity between doc1 & doc2 and vice-versa and them averages them.

Optional: Beneath is the test to cheque the code then far:

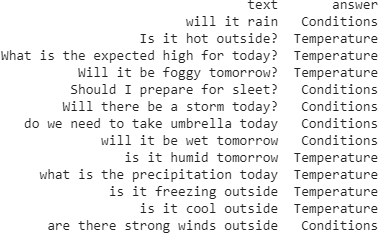

Step half dozen: Now we can apply the classifier to railroad train and predict texts. For that, commencement import a dataset. We'll use the demo dataset available at Watson NLC Classifier Demo. The dataset classifies the texts into 2 catagories — Temperature and Weather. The dataset is very pocket-sized appx. fifty texts simply.

Step 7: Pre-procedure the data. Nosotros'll exercise following preprocessing —

- Remove stopwords (commonly used words such equally 'the', 'i', 'me' etc. ). For this, nosotros'll download list of stopwords from nltk and add additional stopwords.

- Catechumen all texts/documents into lower example

- Consider only text data past ignoring numeric content etc.

We'll load the terminal training information into X_train and labels into y_train

import re

nltk.download('stopwords')

s = stopwords.words('english')

#add additional stop words

due south.extend(['today', 'tomorrow', 'exterior', 'out', 'there']) ps = nltk.wordnet.WordNetLemmatizer()

for i in range(dataset.shape[0]):

review = re.sub('[^a-zA-Z]', ' ', dataset.loc[i,'text'])

review = review.lower()

review = review.split() review = [ps.lemmatize(discussion) for discussion in review if not word in southward]

review = ' '.bring together(review)

dataset.loc[i, 'text'] = review X_train = dataset['text']

y_train = dataset['output'] impress("Below is the sample of training text afterward removing the finish words")

print(dataset['text'][:x])

Step 8: Now, nosotros create instance of KNN classifier form that we created earlier and use the defined methods 'fit' to railroad train (lazy) and then employ the predict function to brand prediction. Nosotros'll use some sample text to make the prediction.

Nosotros go the following prediction which depends on the training data.

So, we have divers the KNN Nearest algorithm for text nomenclature using nltk. This works very well if we accept practiced grooming data. In this example, nosotros have very small preparation data of 50 texts only but it still gives decent results. As we use nltk synsets (synonyms), the algorithm performs well even if the word/texts used in prediction are not in that location in training set because the algorithm uses synonyms to summate the similarity score.

Improvements for hereafter: This algorithm uses K = 1. Further improvements on this algorithm can exist make to implement information technology for K generic variables. We tin can as well implement the 'proba' function in class to provide probabilities. Nosotros'll implement these features in next version of this algorithm :-)

References & Further Readings:

CourseRA — Practical Text Mining in Python

Can You Use A Knn Rechargr Kit On S&b Filter,

Source: https://towardsdatascience.com/text-classification-using-k-nearest-neighbors-46fa8a77acc5

Posted by: williamsundis1972.blogspot.com

0 Response to "Can You Use A Knn Rechargr Kit On S&b Filter"

Post a Comment